Introduction

LinkedIn has one of the most robust Site Reliability Engineering (SRE) practices around.

After all, as the social network of record for jobseekers and salespeople, it is the 6th most trafficked website in the world, with close to 1.5 billion unique visits per month.

LinkedIn’s Site Reliability Engineers (SREs) ensure all that traffic gets served with minimal dropouts and performance degradation.

SRE efforts will only continue to grow, as the company has an ambitious goal to “create economic opportunity for every member of the global workforce”.

LinkedIn’s management wants it to be more than an online resume. The aim is to build an “economic graph”, similar to Facebook’s social graph, that maps every aspect of the global economy: companies, jobs, schools, skills, etc.

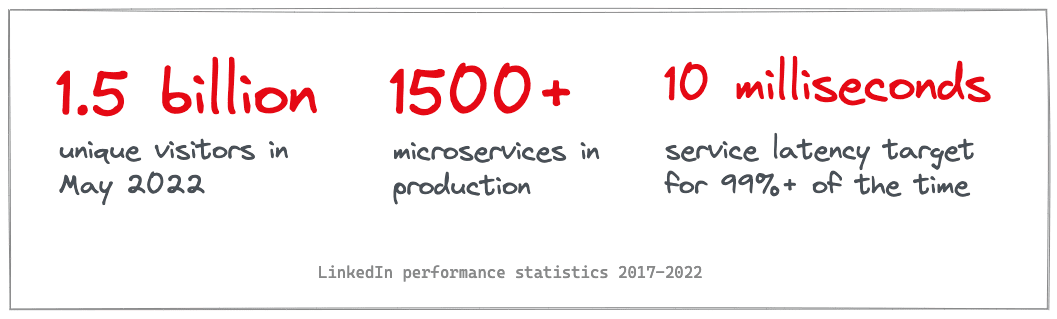

📊 Here are some performance statistics for LinkedIn

As of 2022, LinkedIn had:

- 850 million members

- Between 1.25 and 1.5 billion unique visits per month

- In May 2022, close to 1.5 billion unique global visitors came to LinkedIn.com, up from 1.3 billion visitors in December 2021

- 39% (or 340 million) of users as Premium members

In 2017, LinkedIn had:

- 1500+ services in production

- 600 TB of stored data

- ranking of 8th busiest website in the world

- 100+ SREs supporting 1000+ software engineers (a sensible ratio of roughly 1 SRE per 10 engineers)

- 20,000+ production machines in operations

- 300+ RESTful services

- performance targets of 10ms latency for 99% of services

- 100+ Kafka clusters handling 7 trillion messages per day

Each Site Reliability Engineer at LinkedIn is responsible for ~500 machines, but that can go up or down depending on the needs of the system on the day.

“It is impressive to be able to handle this kind of [work]. This contributes to the challenge of finding the right people. Finding SREs who can operate at this level is a very big challenge.”

— excerpt from video talk on SRE Hiring by Greg Leffler, SRE manager at LinkedIn from 2012-2016

LinkedIn’s SRE managers found that many SRE candidates sought work that would allow them to leave a legacy when they move on.

This trait correlates with the capability to work with broad-spanning complex systems in ambiguous circumstances.

If you can do out-of-the-box thinking and work in tough situations, you’d want that work to have a lasting impact.

🔱 How SRE fits into LinkedIn’s engineering culture

Team formation

- 300+ SREs across 4 global offices — Bay Area (San Francisco, Palo Alto), New York City, Bangalore

- Team size depends on whether they’re embedded in a product team or part of a dedicated SRE team

- SREs get to pick the kind of team they want to be part of

- Hybrid roles exist i.e. SREs who focus on systems or software development

Many SREs at LinkedIn work as “embedded SREs”

In the mid-2010s, many SREs were distributed across product engineering teams i.e. embedded into the product team.

The reasoning behind this was that centralized teams eventually face issues that come with becoming a “shared service” i.e. not so critical to the day-to-day but only consulted if really necessary.

The centralized model would not have been conducive to LinkedIn’s SRE needs at the time. The thing to remember is that SRE is a continuously high-involvement practice and not only for when things go wrong.

LinkedIn has a large SRE base in India

LinkedIn’s engineering management focused strongly on creating SRE teams in India. This may be partly due to hiring difficulties endemic to the SRE field in North America and Europe.

There were initial difficulties as SRE had a stigma within talent pools in India.

Many with years of experience perceived SRE as a relabelled or glorified systems administration role. LinkedIn’s SRE hiring managers instead focused on turning junior engineers and graduates into high-potential SREs.

They emphasized from the outset that SRE was not a typical operations role and that it would involve broad-spanning work in ambiguous settings.

As of 2017, the Bangalore office of LinkedIn had 60 SREs in 10 teams.

History of SRE at LinkedIn

Prior to a modern SRE discipline (the early 2010s)

In 2013, LinkedIn rebranded teams in the existing discipline of AppOps to SRE. The newly minted SREs worked alongside stratified operations teams in verticals such as systems, networks, applications, and DBA.

This way of running software operations proved difficult as LinkedIn continued its growth trajectory. Several SREs noted issues like:

- Cooperation among these teams went only by way of tickets being sent to each other

- There were walls not only between dev and ops but within ops itself

- Developers did not have access to production or even most non-production environments (“hand me the code, and I will deploy it”), so ops were bogged down with release operations

- Pager fatigue was extreme, with operations people training significant others to keep an eye out for alerts on the Blackberry while they slept (3 alerts every 5 minutes on average)

- Outages hit on Monday to Wednesday mornings (6-10am) because of capacity issues, with little visibility into demand since there was no observability instrumentation

- Outages were so frequent that there was one every day of the calendar year

- MTTR was 1500 minutes (25 hours), meaning issues were typically not resolved on the same day

SREs originally started off in this environment as firefighters but evolved during a drastic shift in the software operations at LinkedIn.

Transitioning to SRE from previous operational discipline

There was a need for change in the mid-2010s considering 100 million members relied on LinkedIn at the time.

LinkedIn could not mess up its increasingly complex software operations.

Something had to give, as LinkedIn was suffering frequent outages and performance issues despite now having an SRE team.

LinkedIn SREs and the wider organization had to challenge several antipatterns:

| Antipattern identifier | Antipattern description | Shift to these propatterns… |
| --- | --- | --- |
| Firefighter 👨🏾🚒 | ❌ react to handle incidents that happen to keep the company functioning one more day | ✅ automate the manual work ✅ deliver instrumentation to get rich data on issues and alerts ✅ understand the stack at a deeper level |
| Gatekeeper 💂🏽 | ❌ control releases to protect the site from developers ❌ “talk to me if you want to touch production” ❌ push a button to deploy someone else’s work ❌ have arbitrary schedules for releases | ✅ develop automated gatekeepers to assure quality ✅ let developers own their work in production ✅ support developers in self-service deployment with the use of pre-launch checklists and release guidance |

The decision was then made across the organization to radically change several aspects of software operations. This included a drastic shift in software architecture and developer involvement in operations for the first time.

To achieve this lofty goal, product development was stopped for 3 months.

This signified a gutsy commitment to drive the necessary change.

Several factors supported the transition to more effective software operations:

- The transition received solid management support from all affected VPs

- The umbrella operations organization with sysadmins, network engineers, DB admins, etc. remained, but communication made it clear that it would not be business as usual

- Major changes to how the software was architected came with support from experienced SREs and other seasoned engineers

- Developers were increasingly involved in discussions about their ownership of software in production

How the change was actioned toward a modern SRE discipline

SREs initially advocated a few human-related practices to drive the change, including:

- encouraging and supporting developers in joining the on-call roster; some development teams embraced this, while others kept their distance from on-call

- implementing DevOps, where developers are involved in operational thinking and behaviors around self-deploying code – “If it’s checked-in, it’s ready to go to production”

A few of the technical changes included:

- Moving to a service-oriented architecture (SOA) and reducing dependence on monolithic artifacts

- Developing self-service portals for consuming metrics around services

- Enabling graceful degradation of services rather than returning a 500 error for the whole request load

- Adopting a distributed database model instead of monolithic databases to reduce single point of failure (SPOF) risk

- Switching from hardware to software-based load balancing

- Adopting a centralized branch model to simplify the commit process compared to feature branches

- Implementing multiple testing methodologies like pre-commit, PCS, and PCL

- Developing an auto-remediation system to replace manual network operations center (NOC) processes, which resulted in a career transition program for traditional operations roles into SRE

- Helping developers run A/B tests to ensure backward compatibility of their commit before users would see it

- Supporting self-service deployment with a canary push to production to ensure stability before rollout to the entire cluster
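To make the graceful degradation item more concrete, here is a minimal Python sketch. It is not LinkedIn's implementation: the `PageResponse` type, the `render_page` function, and the split into "critical" and "optional" sections are all assumptions made for illustration. The idea it shows is that a failing non-essential backend is recorded and skipped rather than failing the whole request.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PageResponse:
    """Hypothetical response type: a status code plus the sections that rendered."""
    status: int
    sections: Dict[str, object] = field(default_factory=dict)
    degraded: List[str] = field(default_factory=list)


def render_page(
    critical: Dict[str, Callable[[], object]],
    optional: Dict[str, Callable[[], object]],
) -> PageResponse:
    """Build a page from several backend calls.

    A failure in a critical section still fails the request, but a failure
    in an optional section is recorded and skipped instead of turning the
    whole response into a 500.
    """
    response = PageResponse(status=200)
    for name, fetch in critical.items():
        try:
            response.sections[name] = fetch()
        except Exception:
            return PageResponse(status=500)
    for name, fetch in optional.items():
        try:
            response.sections[name] = fetch()
        except Exception:
            response.degraded.append(name)
    return response


# Hypothetical backends: the profile call works, recommendations time out.
def fetch_profile():
    return {"name": "Example Member"}


def fetch_recommendations():
    raise TimeoutError("recommendation backend timed out")


page = render_page(
    critical={"profile": fetch_profile},
    optional={"recommendations": fetch_recommendations},
)
print(page.status, page.degraded)  # 200 ['recommendations']
```

The member still gets a page with their profile; only the recommendations section is missing, and the `degraded` list gives monitoring something to alert on.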

Here’s a quick rundown of the cadence for self-service deployments for developers at LinkedIn:

- Canary to a single production instance

- Check the automated metrics-based validation of success

- Promote deployment to a single production data center

- Promote deployment to the remaining production data centers

- Ramp to a progressively larger share of the member base

This self-service model has supported 15,000 commits and 600+ feature ramp-ups per day.
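The cadence above can be sketched as a simple gated pipeline. This is an illustrative Python sketch only: the stage names, the `run_rollout` function, and its callbacks are hypothetical, and re-running the health check after every stage (not just after the canary) is an assumption rather than something the source describes.

```python
from typing import Callable, List, Tuple

# Hypothetical stage names that mirror the cadence described in the article.
STAGES: List[str] = [
    "canary: single production instance",
    "single production data center",
    "remaining production data centers",
    "member ramp",
]


def run_rollout(
    stages: List[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> Tuple[bool, List[str]]:
    """Deploy stage by stage, promoting only while validation passes.

    Returns (succeeded, stages_completed). On a failed check, the failing
    stage and every earlier stage are rolled back, newest first.
    """
    completed: List[str] = []
    for stage in stages:
        deploy(stage)
        if not is_healthy(stage):
            for done in reversed(completed + [stage]):
                rollback(done)
            return False, completed
        completed.append(stage)
    return True, completed


# Usage: simulate a metrics check that fails at the first data center.
log: List[str] = []
ok, done = run_rollout(
    STAGES,
    deploy=lambda s: log.append(f"deploy {s}"),
    is_healthy=lambda s: s != "single production data center",
    rollback=lambda s: log.append(f"rollback {s}"),
)
print(ok, done)  # False ['canary: single production instance']
```

In a real system `is_healthy` would compare live metrics against a baseline; here it is a stand-in so that the promotion and rollback flow can be read in isolation.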

Since the self-service model is now well entrenched in the LinkedIn culture, SREs continue their work on various other problems. They work in areas like performance, platforms, resilience, architecture, etc.

They use observability data to develop and implement tools that scale the LinkedIn application in production.

To create these tools, SREs often draw on computer science skills, apply the scientific method and statistical analysis, and implement machine learning models.

What is LinkedIn’s SRE culture like?

3 key principles

Ben Purgason, Director of SRE at LinkedIn from 2017-2018, summarized LinkedIn’s SRE principles as:

- Site up

- Empower developer ownership

- Operations is an engineering problem

Let’s explore each of these 3 principles in further detail:

- “Site up” is a simple catchphrase with the aim of making every engineer and system think of reliability as much as possible

- “Empower developer ownership” asserts that developers own their work end-to-end, not just in the development phase

- “Operations is an engineering problem” aims to involve engineering prowess in solving issues, not just push-button solutions

Hiring culture at LinkedIn

Hiring culture at LinkedIn SRE aims for a good “culture fit”. This is a common practice among many modern tech companies.

However, there is an important distinction in LinkedIn SRE’s definition of culture fit.

Greg Leffler says, “Don’t just hire someone you’d want to hang out with after hours or on the weekend, or they’ve won hackathons or went to elite schools, but someone who can do the job”.

After all, Site Reliability Engineering is a unique line of work where success calls for more than past accolades and social prowess.

Enable everyone to self-service their needs

As the LinkedIn member base grew, more services were added, and developer demand for hands-on support intensified. The need for a self-service approach became apparent as operations teams became stretched thin.

Over the years, LinkedIn SREs have developed several self-service tools and portals.

In particular, they focused on helping teams consume metrics around their specific service. Such metrics allowed teams to benchmark their service against other services.

This also allowed SREs to show teams if their service consumed outsized resources and needed to be optimized.
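As a rough illustration of benchmarking one service against the others, the Python sketch below flags services whose resource usage is far above the fleet median. The service names, the numbers, the `find_outsized_services` helper, and the "3x the median" threshold are all hypothetical; the source does not describe how LinkedIn's portals make this comparison.

```python
from statistics import median
from typing import Dict, List


def find_outsized_services(
    cpu_cores_by_service: Dict[str, float], factor: float = 3.0
) -> List[str]:
    """Return services whose usage exceeds `factor` times the fleet median."""
    if not cpu_cores_by_service:
        return []
    baseline = median(cpu_cores_by_service.values())
    return sorted(
        name
        for name, used in cpu_cores_by_service.items()
        if used > factor * baseline
    )


# Made-up usage figures for four made-up services.
usage = {"feed": 40.0, "search": 55.0, "messaging": 48.0, "jobs": 210.0}
print(find_outsized_services(usage))  # ['jobs']
```

The median is used as the baseline because a single very large consumer would pull a mean upward and hide itself.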

Fight the “hero worship” mentality

A long-lived philosophy in the software operations space has been to perform acts of heroics to rescue systems from critical failures e.g. “I survived going through a 36-hour war room to solve an ops crisis”.

LinkedIn’s change moved engineers away from that mindset. They have been steered to aim for proactive solutions that prevent the need for war rooms and heroic efforts in the first place.

Hunt the problems without a straightforward fix

Ben Purgason believed that it was important for their SREs to hunt problems that don’t have a straightforward fix.

The same went for problems that fit into the “too hard” basket for regular or old-school operations mindsets.

An example would be Ben’s “Every day is Monday” story about resolving a Python issue that had to be traced across all systems running the compiler. Let’s get into the details…

The fix was not a straightforward triage and took 750 engineer hours. However, the ROI was worth the effort. The fix saved close to 233 hours per day that would otherwise have been wasted on managing deployment errors.
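A quick back-of-the-envelope check of that ROI, using only the two figures quoted above and assuming the daily saving stays constant (an assumption, since the source gives no time series):

```python
fix_cost_hours = 750        # engineer hours spent on the fix (from the article)
saved_hours_per_day = 233   # approximate hours saved per day (from the article)

# Days until the time saved equals the time invested.
break_even_days = fix_cost_hours / saved_hours_per_day

# Net hours saved over the first 30 days, under the constant-saving assumption.
net_saved_30_days = saved_hours_per_day * 30 - fix_cost_hours

print(f"{break_even_days:.1f} days to break even")        # 3.2 days to break even
print(f"{net_saved_30_days} net hours saved in 30 days")  # 6240 net hours saved in 30 days
```

On these numbers the investment pays for itself in a little over three days.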

The initial issue seemed from a distance to be a minor hindrance for some engineers. But the gravity of the situation grew fast as more systems and dependencies were found to be affected.

The error was caused by a change that affected a subsystem in an unexpected way.

“The high-profile failures get dealt with immediately (roll back the change), but the minor ones, like an integration test with a 1% increased chance of failure, often slip through the cracks. These minor problems build on top of each other, resulting in a major problem with no obvious single cause. Regardless of the failure type, it is critical that we be aware of changes that occurred around the same time.”

— Ben Purgason, Director of SRE at LinkedIn from 2017 to 2018

Parting words

Countless issues happen in software systems all the time. They are often too peculiar or opaque for day-to-day teams to power through, especially if they have less proactive mindsets.

The truth is that systems are constantly changing, and someone needs to be able to navigate this. They do not have to know everything, but they do have to investigate, experiment, and find solutions along unpaved paths.

As you have read above, the Site Reliability Engineers at LinkedIn are now well-equipped to handle tricky situations.